Why Human-Led Threat Intelligence Still Matters in a World of Artificial Intelligence

Every day, cyber threat intelligence analysts use cutting-edge artificial intelligence (AI) and automation tools to sift through vast volumes of data. AI has emerged as a formidable tool, almost an ally, enhancing our ability to detect, analyse and respond to threats. Its ability to process large datasets and identify patterns has permanently changed the Cyber Threat Intelligence (CTI) domain. From machine learning-driven threat feeds to anomaly-detecting algorithms, these tools have become indispensable for operating at speed and scale.

Yet first-hand experience shows that human-led intelligence remains an indispensable component of effective, actionable intelligence. In an era of AI-led cyber analytics, it is human insight in planning, analysis, dissemination and feedback that ensures context is not missed and important nuances are not overlooked.

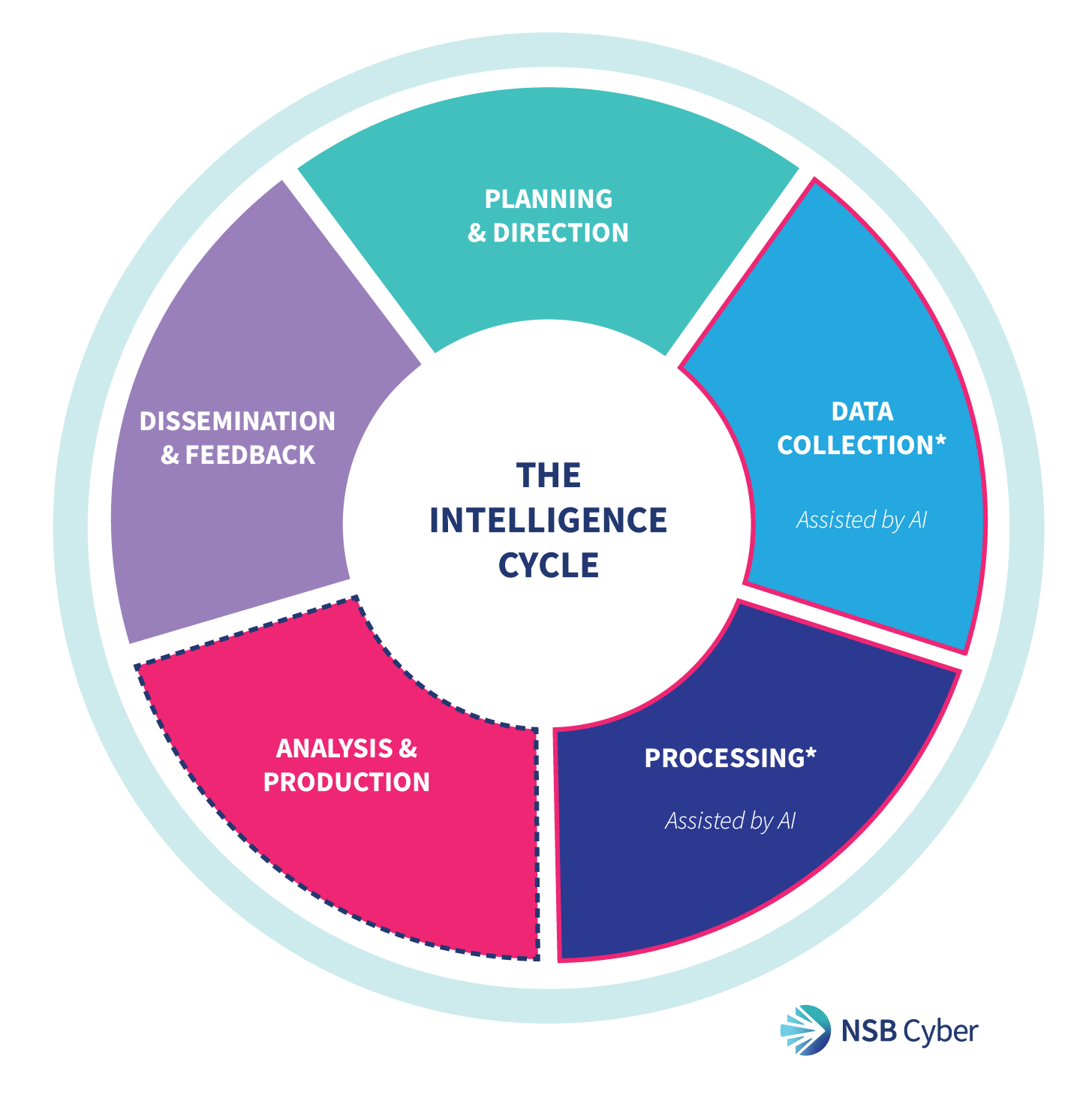

The Intelligence Cycle Is Inherently Human

At its core, Cyber Threat Intelligence is built on the intelligence cycle: Direction, Collection, Processing, Analysis and Dissemination.

AI and automation have streamlined Collection, Processing and, to some extent, Analysis by enabling faster ingestion of threat feeds, log data and data from the cybercrime ecosystem. Direction, Analysis and Dissemination, however, still fundamentally require human interpretation, prioritisation and communication. Deciding what to hunt, weighing the evidence and turning it into clear guidance for decision-makers and stakeholders relies on judgement, context and communication skills that machines do not possess.

In the Direction phase, analysts define priorities based on organisational risks, such as safeguarding sensitive customer data, and ensure those priorities align with business objectives.

During Analysis, they contextualise anomalies, such as unusual network traffic, to determine whether they pose a genuine threat to critical operations.

In Dissemination, analysts translate complex findings into clear, tailored reports for stakeholders, executives or response teams, ensuring the resulting insights are actionable and directly address organisational priorities.

Although AI can assist, it often struggles with these critical phases of the intelligence cycle (and, unfortunately, with other areas too), which can lead to erroneous analysis, mistaken assumptions and poorly finished intelligence products.

What AI Still Can't Do in Intelligence

AI excels at identifying patterns but struggles with nuance. It can detect PowerShell anomalies, failed logins or unusual beaconing, but understanding why those patterns matter and what they indicate is still the job of a human analyst.

Take, for example, Volt Typhoon, a Chinese state-sponsored actor that has quietly targeted U.S. critical infrastructure. Automated alerts may detect anomalous PowerShell use, living-off-the-land techniques or persistent beaconing, but recognising that this activity aligns with state-sponsored objectives, namely pre-positioning in Operational Technology (OT) environments ahead of a potential escalation in tensions or outright conflict, requires deep analytical reasoning.

Multiple public analyses of Volt Typhoon’s Tactics, Techniques and Procedures (TTPs) have reinforced the importance of analyst-driven assessments in:

Identifying pre-exploitation narratives

Discerning intent

Providing geopolitical context

Similarly, when a threat actor’s communications and platforms are breached and leaked (remember Black Basta and LockBit?), automation and AI can aggregate and correlate some of the patterns, trends and important indicators.

But the analysis that follows means connecting:

A ransom note to familiar affiliates

A Bitcoin trail to known wallets and servers

It then means lining these clues up against past campaigns and unreported victims, and testing the links against Telegram and dark-web chatter. That is a human task: tools can surface the breadcrumbs, but judging their credibility and translating them into actionable recommendations still calls for an experienced CTI analyst.

AI Is a Tool, Not a Replacement

All of this is before even considering other known weaknesses of AI platforms, such as hallucinations and unreliable use of external sources. Hallucinations occur when an AI model generates false or misleading information, which risks producing flawed cyber threat assessments. Similarly, poor validation of external sources can introduce inaccuracies that undermine the resulting intelligence product.

AI-led intelligence also struggles with contextual understanding; in other words, AI and machine-learning algorithms can’t read the room. When Canberra and Beijing swap sharp words, or a G20 summit ends in finger-pointing, the dashboards just keep counting alerts. A human analyst knows those headlines can be the prelude to fresh probes against think tanks, academic institutions and defence contractors. In more complex and volatile environments, this blind spot could lead to outright intelligence failures.

Across cyber units, AI is helping to streamline cyber attack analysis and give security experts more time to act. However, it does not replace humans. However effective it becomes, organisations and clients will still require someone to be accountable for decisions, assessments and the resulting products, a role a machine cannot fulfil (yet, at least).

AI algorithms can be biased, and analysts are needed to ensure that intelligence gathering and analysis are conducted ethically and responsibly. This blog does not attempt to provide a dissertation on ethics and potential issues within intelligence practice, nor does it seek to excuse human biases and analytical pitfalls.

Recent cases involving emerging AI tools have shown that they can absorb and replicate the same inequities and skewed judgements embedded in their training data, even when sensitive attributes such as gender, race or sexual orientation are removed. This further underlines the limitations of AI in Cyber Threat Intelligence.

Intelligence Is More Than Indicators

Cyber threat intelligence is not just about collecting indicators of compromise (IOCs) such as IP addresses; it is about producing assessments, guiding defensive strategy and informing executive decisions through actionable recommendations.

Human-led CTI teams are adept at synthesising data across all levels:

Technical

Operational

Strategic

They build narratives, construct threat models, and advise on proactive mitigation.

Intelligence also has a qualitative dimension, including assessments of adversary capability and intent, motivations and targeting patterns, which still depends heavily on human expertise. This is especially true when assessing emerging threats that static indicators fail to capture, such as influence operations or multi-stage supply chain attacks.

Intelligence is useful not because it lists known threats or observables, but because it helps organisations make better decisions: what to patch, who to monitor, where to allocate resources and how to respond to an emerging campaign. These are not purely technical decisions; they are operational and strategic, which is why human insight remains essential.

Current Landscape: The Human-AI Synergy

Modern CTI has evolved from basic threat feeds into sophisticated intelligence platforms with multiple integrations that provide far more than contextual information about threats.

The (not so recent) integration of AI into the intelligence process, and into cyber security at large, has improved some of the core processes of intelligence. More specifically, AI has allowed CTI to become less reactive, which matters in an environment where malicious actors are becoming more advanced, frequent and dynamic.

The key takeaway for any cyber intelligence program is that AI works best as an assistant, not an autonomous replacement.

Human-AI collaboration creates a force-multiplier effect

AI handles the grunt work and data deluge, and humans provide strategic guidance, validation, and deep analysis. When done right, this pairing dramatically improves both efficiency and efficacy.

Consider, for example, a firm that uses an AI-based platform to automatically ingest and score millions of threat indicators each day. The automation might flag 50 new phishing domains or malware hashes. The organisation’s analysts then review these outputs, correlate them with ongoing campaigns to confirm their relevance, and decide which ones warrant escalation to clients. The AI reduces noise and toil, while human analysts keep the focus on the threats that truly matter; it comes close to a perfect synergy.
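The division of labour described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration rather than any particular platform’s implementation: the `Indicator` fields, the 0.8 score threshold, the campaign lookup table and the `analyst_decision` callback (which stands in for a human analyst’s judgement) are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Indicator:
    value: str       # e.g. a domain or file hash
    kind: str        # "domain" or "hash"
    ai_score: float  # hypothetical model-assigned maliciousness score in [0, 1]


def ai_prefilter(indicators: list[Indicator], threshold: float = 0.8) -> list[Indicator]:
    """Automated stage: keep only indicators the model scored at or above the threshold."""
    return [ind for ind in indicators if ind.ai_score >= threshold]


def analyst_review(
    flagged: list[Indicator],
    campaign_iocs: dict[str, str],
    analyst_decision: Callable[[Indicator, Optional[str]], bool],
) -> list[tuple[str, Optional[str]]]:
    """Human stage: correlate each flagged item with tracked campaigns, then let the analyst decide."""
    escalate = []
    for ind in flagged:
        campaign = campaign_iocs.get(ind.value)  # None if not linked to a tracked campaign
        if analyst_decision(ind, campaign):
            escalate.append((ind.value, campaign))
    return escalate


# Hypothetical daily feed and campaign-tracking table.
feed = [
    Indicator("login-update.example", "domain", 0.95),
    Indicator("cdn.example", "domain", 0.40),
    Indicator("0123456789abcdef0123456789abcdef", "hash", 0.85),
]
campaign_iocs = {"login-update.example": "Phishing campaign A"}


def decision(ind: Indicator, campaign: Optional[str]) -> bool:
    # Placeholder for analyst judgement: escalate only items confirmed against a tracked campaign.
    return campaign is not None


flagged = ai_prefilter(feed)
print(len(flagged))  # 2
print(analyst_review(flagged, campaign_iocs, decision))  # [('login-update.example', 'Phishing campaign A')]
```

In this sketch the machine only narrows the pile; the decision to escalate, modelled here by a simple callback, stays with the analyst, who in practice would weigh far more context than a single lookup.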

Keeping the Analyst in Intelligence

Despite the hype, Cyber Threat Intelligence is not a fully automated or AI-produced pipeline, nor should it be. The “intelligence” in threat intelligence ultimately comes from people: the analysts who can think like adversaries, the experts who understand clients’ requirements, and the researchers who turn data into actionable knowledge. AI has become an invaluable partner in this work, performing triage and highlighting findings at speeds no human could match. It helps close the gap created by overwhelming volumes of threat data and a shortage of skilled analysts and, used carefully, can help counter some analytical biases. But it is human-led insight that gives the data meaning and ensures that critical threats do not slip through the cracks.

As threat intelligence analysts, we’ve learned that our value isn’t in sifting raw data (the machines do that well enough) but in asking the right questions and interpreting the answers. We provide the intuition, experience and strategic thinking that direct the AI and then make sense of its output. By weaving human judgement into the AI-driven process, we get the best of both worlds: faster detection and richer understanding. Against ever-evolving cyber threats, maintaining this human-AI partnership is not just ideal; it is critical. After all, it takes a human to outwit a human, and today’s threat actors are very human adversaries, often augmented by AI themselves.

Our defence, therefore, must be AI-augmented, human-led intelligence. This means letting AI handle the heavy lifting, while we analysts focus on what we do best: staying curious, thinking critically, and outsmarting the attackers with insight that only comes from human intelligence.

We’ve prepared the Ransomware Q2 2025 Report, packed with frontline insights, to help you understand today’s threats and prepare for what’s next.